List<T> is one of the most versatile collection types in .NET. As it is meant for general-purpose use, it is not optimized for any specific use case. So, if we look closely enough, we will find scenarios where it falls short. One of these scenarios is when you have lots of data. This article will look at precisely this.

A small disclaimer

As many of my blog posts, this is meant for educational purposes. The code I will provide is (over)-simplified and will fall short in many scenarios.

The problem

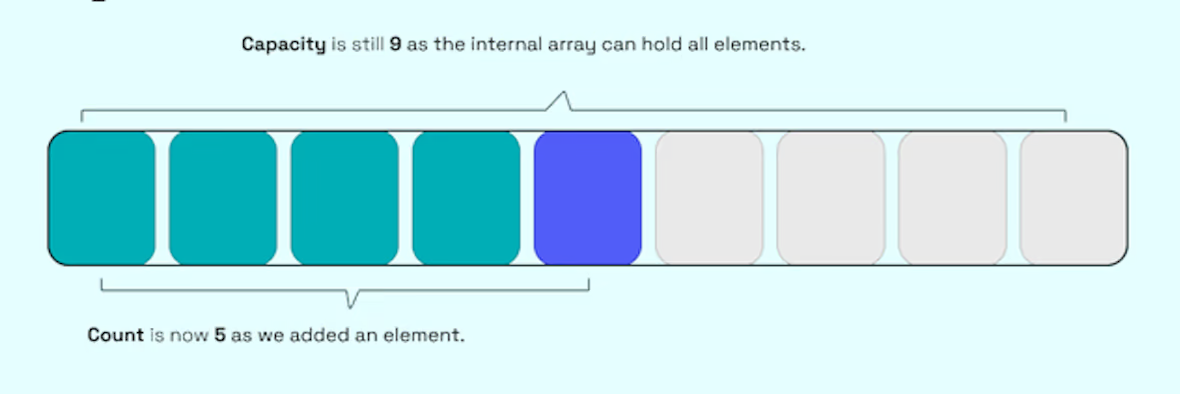

Under normal circumstances, List<T> is extremely fast and there might almost be no reason to switch to another collection type. But if we add enough elements to a List<T>, we potentially can degrade the performance of our application. This is because List<T> is implemented as an array. One single array. If you need a small refresher, I had a blog post illustrating how the list works. As we only have one array that grows offer time, we can hit a magic boundary of 85kb and our object lands on the Large Object Heap. For details, check out the two links I provided. The short version is that the LOH is not compacted and therefore can lead to fragmentation. This can lead to performance issues.

The solution

We don't have to look very far to find a possible solution. The .NET Framework itself has types that use chunking to keep objects quite small. Have a look at the StringBuilder. He has a chunk size of 8000 characters. Once you reach 8000 characters, a new array will be created and will be "attached" to the old one, kind of like a linked list. This way, we can avoid the LOH and keep our objects small. So let's implement this for our List<T>.

The code

public class ChunkedList<T>

{

private const int ChunkSize = 8000;

private T[][] _chunks;

public ChunkedList()

{

_chunks = new T[1][];

_chunks[0] = new T[ChunkSize];

Count = 0;

}

public T this[int index]

{

get

{

ArgumentOutOfRangeException.ThrowIfNegative(index);

ArgumentOutOfRangeException.ThrowIfGreaterThanOrEqual(index, Count);

var chunkIndex = index / ChunkSize;

var innerIndex = index % ChunkSize;

return _chunks[chunkIndex][innerIndex];

}

set

{

ArgumentOutOfRangeException.ThrowIfNegative(index);

ArgumentOutOfRangeException.ThrowIfGreaterThanOrEqual(index, Count);

var chunkIndex = index / ChunkSize;

var innerIndex = index % ChunkSize;

_chunks[chunkIndex][innerIndex] = value;

}

}

public void Add(T item)

{

if (Count == _chunks.Length * ChunkSize)

{

// Create a new larger set of chunks

var newChunks = new T[_chunks.Length + 1][];

Array.Copy(_chunks, newChunks, _chunks.Length);

newChunks[_chunks.Length] = new T[ChunkSize];

_chunks = newChunks;

}

var addToChunk = Count / ChunkSize;

var addToIndex = Count % ChunkSize;

_chunks[addToChunk][addToIndex] = item;

Count++;

}

public int Count { get; private set; }

public void Clear()

{

Count = 0;

}

}

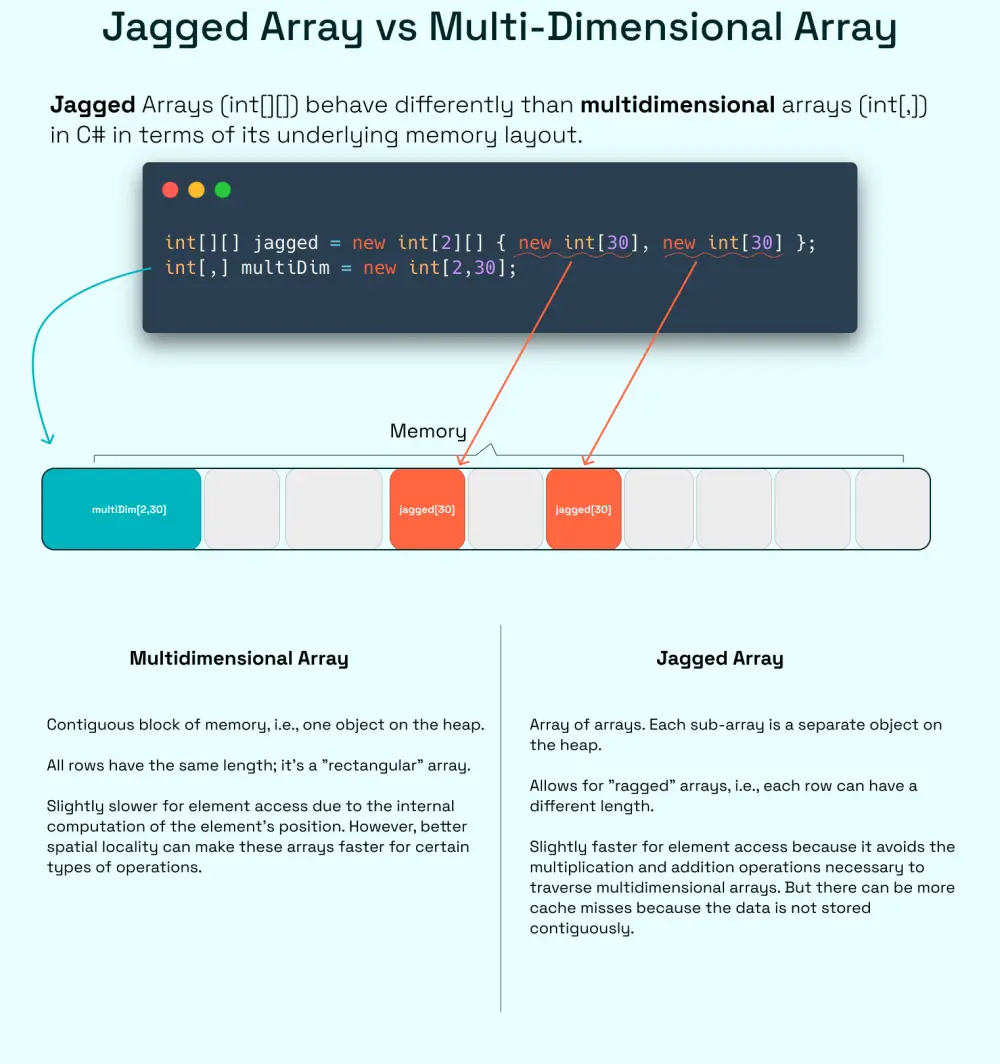

This is a very basic implementation - it doesn't even implement any interface! But the main point is that we are using a jagged array that has at most 8000 elements. Now wait, let's assume we have a ChunkedList<int> then one array inside that jagged array is at most 8000 elements * 4 byte (sizeof(int)) = 32kb - so if we have 3 of those aren't we over the 85kb? Technically yes, but jagged arrays are different than multi-dimensional arrays (int[,]). A jagged array is basically multiple arrays with mutliple addresses on the heap. Here a small graphic illustrating this:

Benchmark

Here the code (you can also find this at the end of this blog post):

[MemoryDiagnoser]

public class ListBenchmarks

{

[Params(1000, 20_0000)] public int Items { get; set; }

[Benchmark(Baseline = true)]

public List<int> List()

{

var list = new List<int>();

for (var i = 0; i < Items; i++)

{

list.Add(i);

}

return list;

}

[Benchmark]

public ChunkedList<int> ChunkedList()

{

var list = new ChunkedList<int>();

for (var i = 0; i < Items; i++)

{

list.Add(i);

}

return list;

}

}

| Method | Items | Mean | Error | StdDev | Ratio | RatioSD | Gen0 | Gen1 | Gen2 | Allocated | Alloc Ratio |

|------------ |------- |-----------:|----------:|----------:|------:|--------:|----------:|----------:|---------:|-----------:|------------:|

| List | 1000 | 1.075 us | 0.0156 us | 0.0146 us | 1.00 | 0.00 | 1.0052 | 0.0019 | - | 8.23 KB | 1.00 |

| ChunkedList | 1000 | 2.177 us | 0.0425 us | 0.0418 us | 2.02 | 0.05 | 3.8300 | - | - | 31.34 KB | 3.81 |

| | | | | | | | | | | | |

| List | 200000 | 375.556 us | 6.2286 us | 5.8263 us | 1.00 | 0.00 | 1398.4375 | 1398.4375 | 399.9023 | 2048.67 KB | 1.00 |

| ChunkedList | 200000 | 281.725 us | 2.2616 us | 2.1155 us | 0.75 | 0.01 | 95.7031 | 41.9922 | - | 784.99 KB | 0.38 |

What is very prominent is that for relatively small amounts of data, the List<T> is faster. This is because the ChunkedList<T> has some overhead that is only worth it for larger collections. And this is what we can see in the second benchmark. The ChunkedList<T> is faster than the List<T> and also uses less memory. And most importantly - there is no stuff on the LOH. Keep in mind that the ChunkedList<T> isn't optimized at all - so if you want, you can squeeze out some further performance.

Iterating through theChunkedList is, of course, linked to some overhead that has to calculate where the correct entry is. If we run a benchmark checking that, it becomes more obvious:

public class ForBenchmark

{

private readonly List<int> _list = new List<int>();

private readonly ChunkedList<int> _chunkedList = new ChunkedList<int>();

[GlobalSetup]

public void Setup()

{

_list.AddRange(Enumerable.Range(0, 20_000));

foreach (var item in _list)

{

_chunkedList.Add(item);

}

}

[Benchmark(Baseline = true)]

public int ForList()

{

var sum = 0;

for (var i = 0; i < _list.Count; i++)

{

sum += _list[i];

}

return sum;

}

[Benchmark]

public int ForChunkedList()

{

var sum = 0;

for (var i = 0; i < _chunkedList.Count; i++)

{

sum += _chunkedList[i];

}

return sum;

}

}

Results:

| Method | Mean | Error | StdDev | Ratio | RatioSD |

|--------------- |----------:|----------:|----------:|------:|--------:|

| ForList | 9.517 us | 0.0878 us | 0.0778 us | 1.00 | 0.00 |

| ForChunkedList | 22.239 us | 0.2017 us | 0.1887 us | 2.34 | 0.02 |

Conclusion

If you have a large amount of data that you want to store in a List<T> be aware of some implications a continuous growth of the list can have. If you want to avoid this, you can come around with your own implementation that knows how to handle things. These are also known as Frugal objects. A frugal object typically serves its purpose well without requiring frequent replacement or causing additional expenses. The bigger your code base the more likely it is that you have to roll out your custom implementations.