AI is a hot topic (some would say a hype topic), and it comes with bold claims like this:

There Will Be No (Human) Programmers in Five Years

Source: https://decrypt.co/147191/no-human-programmers-five-years-ai-stability-ceo

Now, how far is this from reality? Let's take a look at the current state of AI in the context of programming.

Of course, this isn't the only such claim. We also have the current CEO of NVIDIA (Jensen Huang) saying:

Don’t learn to code

But is that really the case? To answer the question, we have to understand how such claims are made and what they are based on. Spoiler alert: you will be less impressed by the end! To make a bit more sense of it, let's have a look at the website of "Claude 3" from Anthropic:

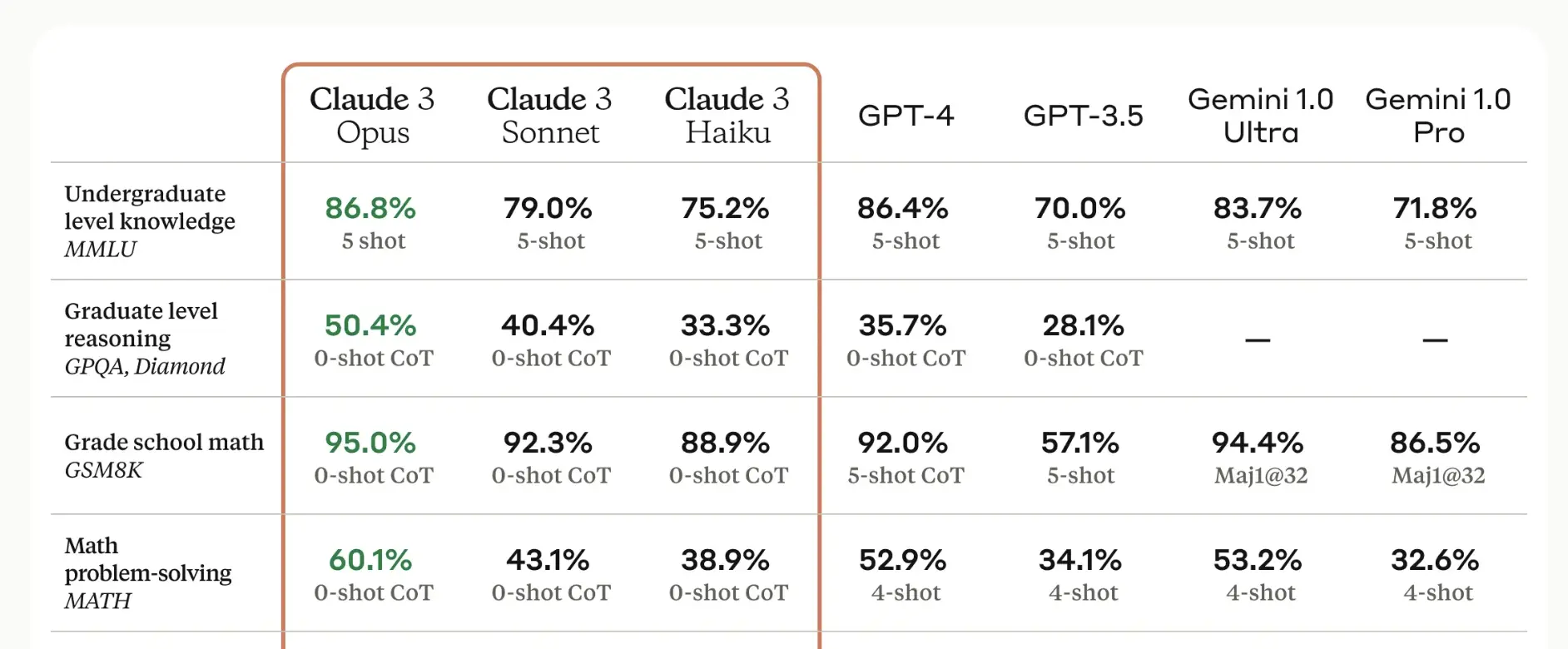

This image was taken on the 18th of April 2024

The comparison gives you the feeling that Claude 3 Opus, in this case, is a very competent model that surpasses GPT-4 in its programming capabilities: 84.9% in Coding "HumanEval". What is that in the first place? There are multiple sources, so let's pick one. Here is the first: https://arxiv.org/html/2402.14852v1

The task:

Given two positive integers a and b, return the even digits between a and b, in ascending order

So generate_integers(2, 8) => [2, 4, 6, 8], generate_integers(8, 2) => [2, 4, 6, 8], and generate_integers(10, 14) => []. That would be in the "easy" category of LeetCode at best. And that is one of the standard benchmarks used to evaluate the programming capabilities of a model. Claude 3 Opus (the best in this comparison) reaches a pass rate of about 85% on it. That means it fails to solve roughly 15% of tasks at this level.
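For reference, here is one reading of the task that is consistent with all three examples, sketched in Python (the language HumanEval itself uses). This is my own minimal sketch, not the benchmark's reference solution. It assumes that "even digits" means the even single-digit numbers inside the range, and that a and b are positive, as the task states.

```python
from typing import List


def generate_integers(a: int, b: int) -> List[int]:
    """Return the even single-digit numbers between a and b, ascending.

    Assumption: a and b are positive, so 0 is never part of the range.
    """
    low, high = min(a, b), max(a, b)
    # Only values below 10 count as "digits" under this reading,
    # so the upper bound is capped at 9.
    return [n for n in range(low, min(high, 9) + 1) if n % 2 == 0]


if __name__ == "__main__":
    # The three examples quoted in the task description.
    assert generate_integers(2, 8) == [2, 4, 6, 8]
    assert generate_integers(8, 2) == [2, 4, 6, 8]
    assert generate_integers(10, 14) == []
```

The whole thing is a bounds swap plus one filter, which is roughly the level of difficulty this benchmark measures.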

By the way, I gave that task to ChatGPT-4, and it gave me this code:

```csharp
using System;
using System.Collections.Generic;

class Program
{
    static List<int> GenerateIntegers(int a, int b)
    {
        List<int> result = new List<int>();

        // Ensure a <= b
        int start = Math.Min(a, b);
        int end = Math.Max(a, b);

        // Iterate through the range from a to b
        for (int num = start; num <= end; num++)
        {
            // Convert the number to a string to iterate through its digits
            foreach (char digitChar in num.ToString())
            {
                // Parse the digit back to an integer
                int digit = int.Parse(digitChar.ToString());

                // Check if the digit is even
                if (digit % 2 == 0)
                {
                    result.Add(digit);
                }
            }
        }

        return result;
    }

    static void Main(string[] args)
    {
        // Test cases
        List<int> result1 = GenerateIntegers(2, 8);
        Console.WriteLine("Result for (2, 8): " + string.Join(", ", result1)); // Output: 2, 4, 6, 8

        List<int> result2 = GenerateIntegers(8, 2);
        Console.WriteLine("Result for (8, 2): " + string.Join(", ", result2)); // Output: 2, 4, 6, 8

        List<int> result3 = GenerateIntegers(10, 14);
        Console.WriteLine("Result for (10, 14): " + string.Join(", ", result3)); // Output:
    }
}
```
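To make it easy to check what this code actually returns, here is a line-by-line Python port of the same logic. The port and the name generate_integers_quoted are mine, not part of ChatGPT's answer. It is only meant as a quick sketch to reproduce the behaviour without a .NET toolchain.

```python
def generate_integers_quoted(a, b):
    """Python port of the quoted C# logic (hypothetical name, same behaviour)."""
    result = []
    start, end = min(a, b), max(a, b)
    for num in range(start, end + 1):
        # Walk over every digit of every number in the range
        for digit_char in str(num):
            digit = int(digit_char)
            if digit % 2 == 0:
                result.append(digit)
    return result


print(generate_integers_quoted(2, 8))    # [2, 4, 6, 8]
print(generate_integers_quoted(8, 2))    # [2, 4, 6, 8]
print(generate_integers_quoted(10, 14))  # [0, 2, 4], but the task expects []
```

The first two examples pass, while the third one collects the 0 from 10, the 2 from 12 and the 4 from 14.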

Of course, it doesn't work. The implementation collects the even digits of every number in the range, so for (10, 14) it returns 0, 2, 4 instead of an empty list. But sure, we are at the beginning of the journey. Models will certainly get better, but here are my two major problems.

## 1. Coding is a small part of your job

Actual coding does not fill your whole day! Not by a long shot. Code is basically the artifact of your thinking and decision-making process. Once you have decided how to tackle the problem (going from problem space to solution space, meaning you have understood the problem and have a possible solution in mind), you can write the code. Of course, writing code is not trivial, but in many cases the "coding" part is easier than the thinking and drawing that comes before it.

For an AI to work, it has to understand the problem and the solution space. And that is the hard part. Oftentimes you have to rely on context or domain knowledge to solve a problem. A concept in one domain doesn't necessarily mean the same thing in another. For example, the word "table" in the context of a database doesn't mean the same as in the context of furniture. So context matters!

Will AI go there? Maybe!? Hard to tell - I am no AI expert.

## 2. Refactoring

Those models are great at spitting out some code, but they lack the capability to refactor it. Given bigger chunks of code, they are in the majority of cases not able to do a proper refactoring. Well, you could argue you don't care because only the AI has to understand the code, but that is a bold claim to make. It would mean you give the AI the full responsibility for understanding the code and the problem. And that is a big ask.

We all know that the more code you write, the higher the chance of a bug (or multiple bugs). And that is true for AI models as well. The more complex an AI model is, the more likely it is to have big flaws in its system. If you have a broken system to begin with, how likely is it that it creates something "not broken"?

## Agents and other problems

The current pinnacle of hype is, or was, "Devin": an agent that is able to write code and use the web, a shell and so on to reach its goal. There was even a recent video (https://www.youtube.com/watch?v=UTS2Hz96HYQ) about how it "can solve" an Upwork job. But, to no big surprise, it seemed more staged than real. Here is a nice video with more explanation:

- https://www.youtube.com/watch?v=tNmgmwEtoWE

- The TL;DR Version: https://news.ycombinator.com/item?id=40008109

## So - do I have a job in 5 years?

Yes. Software engineering isn't dead or in the hands of LLMs. The current state shows that there are still big gaps to overcome. Of course, there will be layoffs because people believe the hype and think that they can replace three developers with LLMs or agents. And that is my problem: the hype.

## The Hype

If you read news on social media, you see people getting hooked and hyped by such videos right away. Of course, they look cool and promising, but keep in mind that such companies have to earn money! These videos are all promotional! When was the last time you listed all your shortcomings in great detail on your CV? Oh, you didn't? Hmm. That said, don't believe such hype blindly.

I do feel we are somewhere at the peak of inflated expectations. Slowly but steadily, people realize that AI isn't the silver bullet. That doesn't mean AI can't do anything. Of course, there are very valid use cases: I myself use GitHub Copilot when coding. But it is a tool and not a replacement for me. It helps, for example, with simple mapping logic, and it can create some scaffolding code quite easily!

So stay calm and don't believe the hype. People love to sell emotions - positive as well as negative ones.