If you were plowing a field, which would you rather use two strong oxen or 1024 chicken? - Seymour Cray

This describes more or less what we gonna see in a few minutes. But before let's have a look at a few things so we understand better what is going on.

GPGPU

GPGPU stands for general-purpose computing in graphics processing unit. It was created from the first shader languages like OpenGL. Those were more or less restricted to the graphics pipeline to multiply matrices or change colors of a pixel. GPGPU in contrast is way more general 😉. It is mosed used for simulations. The advantage of a GPU is that it has thousands of core. In contrast to a CPU they are just not powerful. So one calculations takes way longer, but you make thousands of them at a time. Flynn's classification labels this as SIMD (Single Instruction Multiple Data).

Now two points which are very important:

- The problem has to be parallelizable

- The problem should be "big" enough"

What do I mean with the second point? Well if you want to calculate stuff on your GPU you first have to send the stuff to your GPU and back. That is a trade-off you have to make. And sending data back and forth just takes time. So if you have a small problem the overhead of sending data around can be multiple magnitude bigger.

ILGPU

ILGPU is a JIT (just-in-time) compiler for high-performance GPU programs written in .Net-based languages. ILGPU is an open-source project hosted on github. It enables us to write C# code which gets executed on the GPU. And the best: You can debug your code via the integrated multi-threaded CPU accelerator. It also has a lot of algorithms out of the box shipped with the nuget package.

Let's Code - Matrix Multiplication

Before we go to the GPU part. Let's build up a reference with a native CPU version. We will take a very naive approach in both cases. For the simplicity our matrix will have the same dimension for x and y.

private static int[,] MultiplyMatrixHost(int[,] matrixA, int[,] matrixB)

{

var length = matrixA.GetLength(0);

var output = new int[length, length];

for (var i = 0; i < length; i++)

{

for (var j = 0; j < length; j++)

{

var sum = 0;

for (var k = 0; k < length; k++)

{

sum += matrixA[i, k] *matrixB[k, j];

}

output[i, j] = sum;

}

}

return output;

}

For simplicity I'll take a normal stopwatch but you also take a sophisticated library like Benchmark.Net

var matrixA = new int[1000, 1000];

var matrixB = new int[1000, 1000];

var s = Stopwatch.StartNew();

MultiplyMatrixHost(matrixA, matrixB);

Console.WriteLine("CPU C# naive: " + s.ElapsedMilliseconds);

GPU - Code

Let's add the package to our project:

PM > Install-Package ILGPU -Version 1.0.0-rc2

The first thing we have to do is creating a context:

using var ctx = Context.CreateDefault();

With this context we can now iterate through all devices. For the purpose of demonstration let us take the first device which is either capable of CUDA or OpenCL. Normally this is your graphics accelerator.

var gpuDevice = ctx.Devices.First(d => d.AcceleratorType == AcceleratorType.Cuda || d.AcceleratorType == AcceleratorType.OpenCL);

If you want to see the details you can call gpuDevice.PrintInformation();;. This will print out a lot of information about the accelerator, like how much memory or how many threads it can handle at a time. For my nVidia Quadro m1200

Device: Quadro M1200

Accelerator Type: Cuda

Cuda device id: 0

Cuda driver version: 11.4

Cuda architecture: SM_50

Instruction set: 7.4

Clock rate: 1148 MHz

Memory clock rate: 2505 MHz

Memory bus width: 128-bit

Warp size: 32

Number of multiprocessors: 5

Max number of threads/multiprocessor: 2048

Max number of threads/group: 1024

Max number of total threads: 10240

Max dimension of a group size: (1024, 1024, 64)

Max dimension of a grid size: (2147483647, 65535, 65535)

Total amount of global memory: 4294967296 bytes, 4096 MB

Total amount of constant memory: 65536 bytes, 64 KB

Total amount of shared memory per group: 49152 bytes, 48 KB

Total amount of shared memory per mp: 65536 bytes, 64 KB

L2 cache size: 2097152 bytes, 2048 KB

Important here are a few things:

- Max number of threads. This will give us the maximum degree of parallelism. Yes our GPU-cores are weaker than the CPU counterpart, but we have many of them

- Max dimension of grid size: We see that cores are structured in a grid. This is important because also such groups share memory. And accessing "shared group" memory is faster than the global one. It behaves the same with a CPU. You have L1, L2 and L3 cache. L1 is directly in the microprocessor, L2 outside and L3 which normally feeds the L2 cache. All of them are faster than accessing the main memory. Cache-Hits can be a major performance booster.

Now let's build our program which we will send to the GPU. Those programs are called "kernel".

var kernel = gpuDevice.LoadAutoGroupedStreamKernel<

Index2D,

ArrayView2D<int, Stride2D.DenseX>,

ArrayView2D<int, Stride2D.DenseX>,

ArrayView2D<int, Stride2D.DenseX>>(

MatrixMultiplyNaiveKernel);

The first parameter defines the structure of our grid on the GPU. Remember earlier as I said that we lay out the GPU cores in a grid. Here we defined how it is laid out. The next 3 parameters describe a 2D-Array. So the first two will be our two input matrices and the last one is the output. The last one is our program, which will be executed on the GPU. So let's define this:

private static void MatrixMultiplyNaiveKernel(Index2D index, ArrayView2D<int, Stride2D.DenseX> matrixA, ArrayView2D<int,Stride2D.DenseX> matrixB,

ArrayView2D<int, Stride2D.DenseX> output)

{

var x = index.X;

var y = index.Y;

var sum = 0;

for (var i = 0; i < matrixA.IntExtent.Y; i++)

{

sum += matrixA[new Index2D(x, i)] * matrixB[new Index2D(i, y)];

}

output[index] = sum;

}

Now that looks way slimer than the CPU code, doesn't it? And the reason is simple. Each thread is responsible only for one cell in the output matrix. Here you can see the power of the GPU. We have a lot of threads to utilize. So our kernel just created the sum from the x-th row of matrix A and the y-column of matrix B and puts it into the output array. Pretty convenient?

Now, what is missing? We have to pass data to the graphic card and get the data back once we are finished. Now it get's a bit C-like

var length = matrixA.GetLength(0);

using var mA = gpuDevice.Allocate2DDenseX<int>(new Index2D(length, length));

using var mB = gpuDevice.Allocate2DDenseX<int>(new Index2D(length, length));

using var mC = gpuDevice.Allocate2DDenseX<int>(new Index2D(length, length));

mA.CopyFromCPU(matrixA);

mB.CopyFromCPU(matrixB);

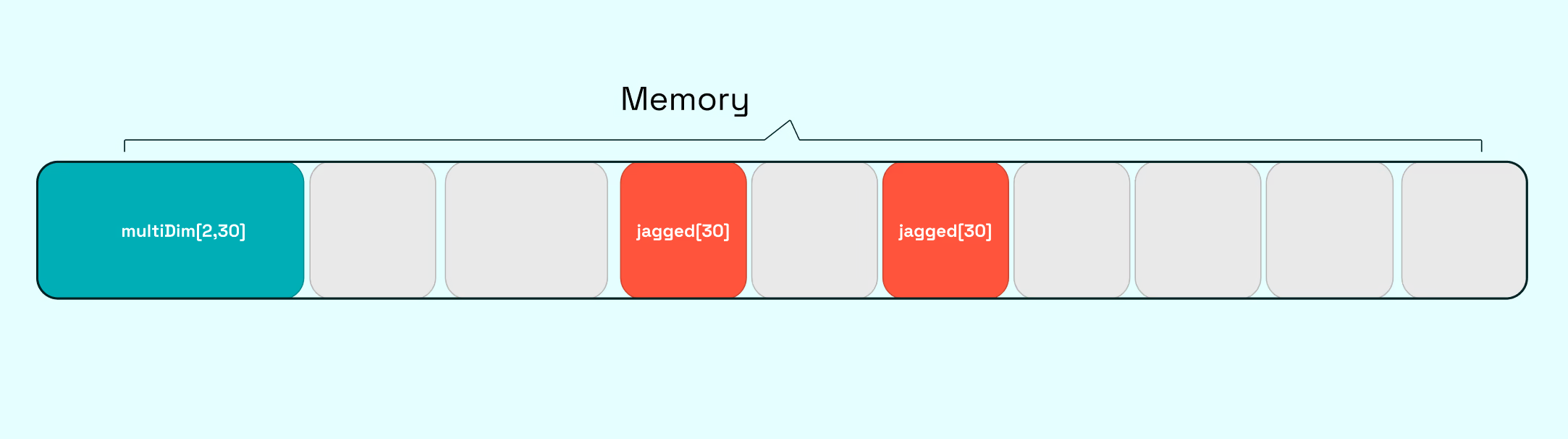

First we have to allocate memory on the GPU. Those buffers are 2D (remember our matrices will always be n x n in dimension). CopyFromCpu does exactly what it says. Send our data from the HOST RAM to the GPU RAM.

Now only execution and returning the data is missing:

kernel(mC.Extent.ToIntIndex(), mA.View, mB.View, mC.View);

int[,] returnedArray = mC.GetAsArray2D();

kernel will really execute our code and we will also pass the layout to the kernel. Afterwards with GetAsArray2D() we pass pass back the data from the GPU to the CPU / RAM. Initially I said to you that you need a specific problem size so you can "hide" this time sending data from A to B and back.

That is all for now. There are some advanced layout like tiling which would improve your timing even further. But there also pitfalls like branching. This will follow in detail in another post. But for now a small hint: SIMD 😉 Single instruction multiple data. If we diverge and use a different "instruction" then we have to wait until one branch is done until we can start the other branch, because the other branch has a different operation. This can limit your degree of parallelism by a lot.

Performance

Let's make some comparisons: We will run the naive multiplication with different sized matrices. For the GPU we will take also the transport into account.

| 10x10 Matrix | 100x100 Matrix | 500x500 Matrix | 1000x1000 Matrix | 1500x1500 Matrix | |

|---|---|---|---|---|---|

| CPU | < 0 ms | 6ms | 1061ms | 8756ms | 39910ms |

| GPU | 560ms | 590ms | 610ms | 680ms | 945ms |

| Speedup | 0.00 | 0.01 | 1.73 | 12.88 | 42.2 |

I know the comparison is not the fairest. I could also use TPL in C# 😉 I might cover this also in a later blog post.

Debugging

There are two main ways to debug your application which are supported out of the box from ILGPU. Either via writing to the Host console or directly debugging the code.

Writing to the Host Console

This options works with all devices. So it doesn't matter if you are really running your code on your GPU or on the emulated device which runs on the CPU. You can easily print out stuff like this:

private static void MatrixMultiplyNaiveKernel(Index2D index, ArrayView2D<int, Stride2D.DenseX> matrixA, ArrayView2D<int,Stride2D.DenseX> matrixB,

ArrayView2D<int, Stride2D.DenseX> output)

{

var x = index.X;

var y = index.Y;

Interop.WriteLine("x: {0}", x);

Interop.WriteLine("y: {0}", y);

Interop.WriteLine("");

Not all string operations are supported. Concatenating your string with the + will lead to an exception. string.Format seems to work just fine.

Please note also the following will not work:

Interop.WriteLine($"x: {x}");

Interop.WriteLine($"y: {y}");

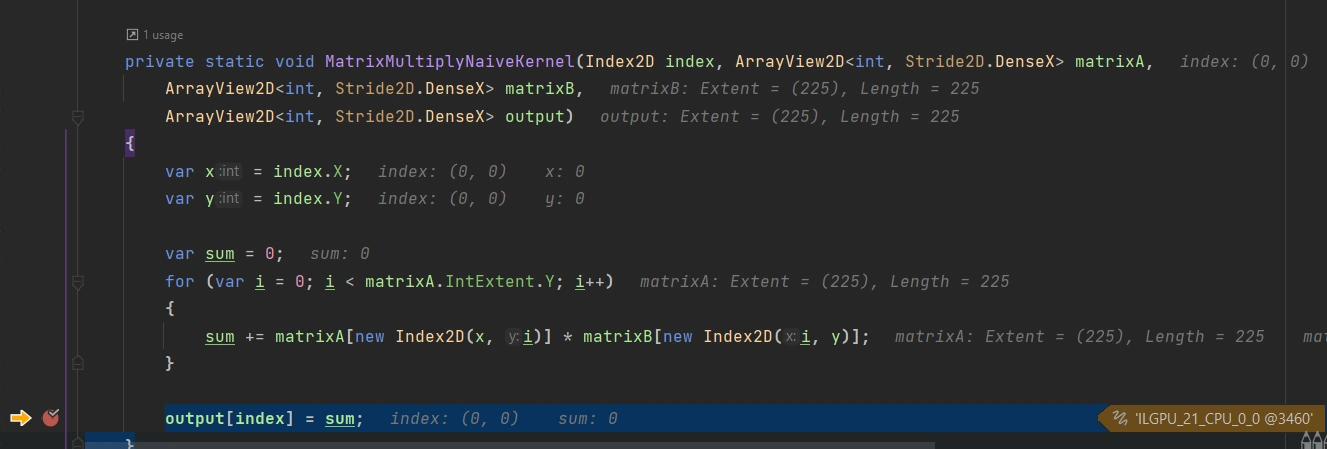

Debugging

At the moment (1.0.0-rc.2) debugging via your IDE is not working on the GPU, but they are working on it. To debug your code use the CPU accelerator instead of CUDA or OpenCL.

var cpuDevice = ctx.Devices.Single(d => d.AcceleratorType == AcceleratorType.CPU);

The result looks like this:

Result

This is the first introduction to ILGPU. There are a lot of samples in their sample repository which you can find here. If you want to see more, let me know