I will skip the basics of what an unsigned integer is. Let's just concentrate on whether or not you should use one.

The obvious use case would be the Count property on your array-like structures or lists. A count can't get smaller than zero.

Let's have a look at a few things, shall we?

Common Language Specification aka CLS

Let's start with the CLS. The CLS does not include the unsigned integer types such as ushort, uint and ulong. That means if you write a library in C#, where those types exist, and expose them in its public API, you can run into problems when the library is consumed from certain other languages in the .NET ecosystem. But honestly, who cares about CLS compliance when >90% is C# and you are sure your library will only be consumed from C# code?

Can an array have a negative length?

Well no... but technically yes. There are some reasons why Array.Length is an int32:

- .NET 1 only allowed objects up to 2 GB in size, so int32 was good enough
- Interop with other languages needs int anyway
- Negative values are often used as a marker. For example, Array.IndexOf returns -1 and Array.BinarySearch returns a negative number when a value wasn't found (good that -1 can't be a useful length)
- Wrap-around behavior of uint is dangerous
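
To illustrate the marker point from the list above, here is a minimal sketch using two methods from the base class library, Array.IndexOf and Array.BinarySearch. The array contents and the variable names are made up for this example.

```csharp
using System;

int[] sorted = { 1, 3, 5 };

// Array.IndexOf signals "not found" with -1 for ordinary zero-based arrays.
int indexOfMissing = Array.IndexOf(sorted, 4);

// Array.BinarySearch returns a negative number when the value is missing:
// the bitwise complement of the index where the value would be inserted.
int searchResult = Array.BinarySearch(sorted, 4);
int insertionPoint = ~searchResult;

Console.WriteLine(indexOfMissing);  // -1
Console.WriteLine(searchResult);    // -3
Console.WriteLine(insertionPoint);  // 2
```

With an unsigned return type, neither of these "not found" signals could be expressed without a separate flag or an exception.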

By the way, if you want to see a negative (but valid) index, C/C++ comes to the rescue with pointers 😄

    int a[3] = { 1, 2, 3 };
    int* p = &a[1];      // Make p point to the second element
    std::cout << p[-1];  // Prints the first element of a, equal to *(p - 1)
    std::cout << p[ 0];  // Prints the second element of a, equal to *p
    std::cout << p[ 1];  // Prints the third element of a, equal to *(p + 1)

Wrap around behavior of uint

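    uint max = uint.MaxValue;
    // Written as the constant expression uint.MaxValue + 1, the compiler
    // would reject this at compile time, hence the local variable.
    uint a = max + 1; // wraps around in the default (unchecked) context
    Console.Write("a is " + a); // a is 0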

What happens is that all uint arithmetic basically behaves like a ring: every result is taken modulo 2^32. Just imagine the following arithmetic operation:

    uint a = 100u;
    uint b = 100u;
    uint c = a * b;

What really happens is this:

    uint a = 100u;
    uint b = 100u;
    // Exact product in 64 bits, then reduced modulo 2^32
    uint c = (uint)(((ulong)a * b) % (1UL << 32));
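
With 100 * 100 the modulo has no visible effect, so here is a small sketch with operands that are large enough to actually wrap. The values and variable names are picked purely for illustration.

```csharp
using System;

uint a = 100_000u;
uint b = 100_000u;

// The mathematical product 10,000,000,000 does not fit into 32 bits.
uint wrapped = unchecked(a * b);

// The same result, computed explicitly in 64 bits and reduced modulo 2^32.
ulong exact = (ulong)a * b;
uint reduced = (uint)(exact % (1UL << 32));

Console.WriteLine(exact);    // 10000000000
Console.WriteLine(wrapped);  // 1410065408
Console.WriteLine(reduced);  // 1410065408
```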

Okay, this brings big issues, for example when you want to access an array:

    uint arrayIndex = 0;
    var value = MyArray[arrayIndex - 1];

Now you would access index uint.MaxValue. Most likely there is nothing at that index, so you will run into issues: in .NET the bounds check turns the access into an exception at run time.

This is also how unsigned integers behave in C/C++, which is the foundation of the .NET runtime.

At least Microsoft was nice enough to give us the checked keyword, which throws an OverflowException instead of silently wrapping around.
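
As a minimal sketch of the difference, the following compares the same subtraction in an unchecked and a checked context. The variable names are made up for this example.

```csharp
using System;

uint arrayIndex = 0;

// unchecked: the subtraction silently wraps around to uint.MaxValue.
uint wrappedIndex = unchecked(arrayIndex - 1);

// checked: the same subtraction throws instead of wrapping.
bool overflowDetected = false;
try
{
    uint previousIndex = checked(arrayIndex - 1);
    Console.WriteLine(previousIndex); // not reached
}
catch (OverflowException)
{
    overflowDetected = true;
}

Console.WriteLine(wrappedIndex);      // 4294967295
Console.WriteLine(overflowDetected);  // True
```

The price is that you have to remember to opt in, either per expression as shown here, with a checked block, or through the corresponding compiler option for the whole project.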

For Loops

Let's have a look at the following code-snippet:

    for (uint i = 9; i >= 0; i--)
    {
        Console.WriteLine("Hello World");
    }

How often do you think the loop will run? ... The answer is forever. Why? Because of the wrap-around. A uint can't get smaller than 0, therefore i >= 0 is a tautology and always true.
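
If you really need to count down with a uint, one possible way to write the loop (a sketch, not the only option) is to keep the counter one above the index, so it is never decremented below zero. The list named visited only exists to make the result observable.

```csharp
using System;
using System.Collections.Generic;

var visited = new List<uint>();

// Loop while i is strictly greater than zero and derive the index inside
// the body, so the counter never has to go below zero.
for (uint i = 10; i > 0; i--)
{
    uint index = i - 1;
    visited.Add(index);
}

Console.WriteLine(string.Join(", ", visited)); // 9, 8, 7, 6, 5, 4, 3, 2, 1, 0
```

With a plain int the original loop condition would simply have worked, which is rather the point of this section.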

Complex arithmetic

Just imagine you mix unsigned and signed values in a formula. Have a look at this: Preserving rules in C. There can be very tricky pitfalls when mixing both.
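
C# is a bit more forgiving than C here, but it has its own surprises. The following sketch, with made-up variable names, shows that adding a uint and an int yields a long, and what happens once that result is forced back into a uint. In C, by contrast, the int operand would be converted to unsigned and the addition itself would wrap around.

```csharp
using System;

uint unsignedValue = 1;
int signedValue = -2;

// C# promotes uint and int operands to long, so nothing wraps here.
var sum = unsignedValue + signedValue;

// Forcing the result back into a uint reinterprets -1 as uint.MaxValue.
uint forced = unchecked((uint)sum);

Console.WriteLine(sum.GetType()); // System.Int64
Console.WriteLine(sum);           // -1
Console.WriteLine(forced);        // 4294967295
```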

So should I never use it?

No. There are good reasons to use a uint, but readability should not be one of them. In most cases int is big enough and the more economical choice.

Bit Shifting

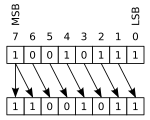

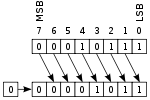

In .NET signed and unsinged integer behave differently when it comes to bit-shifts. Signed integers doing arithemtic shifts:

And unsigned integers do logical shifts:

If you need logical bitshifts you would take uints.
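
A small sketch of both kinds of right shift on the same bit pattern; the value -8 and the variable names are chosen only for illustration.

```csharp
using System;

int signedValue = -8;                               // bits: 0xFFFFFFF8
uint unsignedValue = unchecked((uint)signedValue);  // same bits, read as unsigned

int arithmeticShift = signedValue >> 1;  // the sign bit is copied in from the left
uint logicalShift = unsignedValue >> 1;  // a zero is shifted in from the left

Console.WriteLine(arithmeticShift);              // -4
Console.WriteLine(logicalShift);                 // 2147483644
Console.WriteLine(logicalShift.ToString("X8"));  // 7FFFFFFC
```

Newer C# versions (C# 11 and later) also offer a >>> operator that performs a logical shift on signed types, so this reason carries a little less weight than it used to.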

Interop

From personal experience I can say that a lot of stuff that is close to the hardware still uses uint, for example OpenCL or NVIDIA's CUDA. If you have ever tried the same in Java, it is a bit of a pain in the a**, as Java has no unsigned data types.

Conclusion

There are cases where a uint makes sense. But in your everyday life you are safer with a signed integer.